Bayes benefits your research

Stefan Wiens

2022-09-05

context

maven

- “A Maven is a trusted expert in a field who passes on knowledge to others.” (Sharpe 2013)

- Zoltan Dienes

- Eric-Jan Wagenmakers

- own attempt (Wiens and Nilsson 2017)

common approaches

Frequentist

- significance test (p < .05)

- confidence interval

Bayesian

- Bayes factor (BF > 3)

- credible interval

- which do you use in your research?

- do you have concerns about your approach?

- p < .05 (Cohen 1994; Gigerenzer 2004)

- confidence intervals (Hoekstra et al. 2014; Morey et al. 2016)

- general (Dienes 2008)

goal

hypothesis testing

- significance test (p < .05)

- Bayes factor (BF > 3)

estimation

- confidence interval

- credible interval

example

tiredness symptoms after listening to my talk

- hypothesis testing approach

- Tiredness was significantly lower in the treatment than control group (p < .05).

- estimation approach

- Tiredness was lower for treatment than control; mean difference was -2.3, 95%CI [-4.5, -0.1].
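The estimation approach above can be sketched in a few lines. This is a minimal illustration, not the analysis behind the numbers on the slide: the ratings are simulated, the function name `mean_difference_ci` is hypothetical, and the interval uses a normal approximation (a t-based interval would be slightly wider for small groups).

```python
import math
import random
import statistics


def mean_difference_ci(treatment, control, level=0.95):
    """Return (difference, lower, upper) for mean(treatment) - mean(control).

    ASSUMPTION: normal approximation to the sampling distribution,
    which is reasonable only for fairly large samples.
    """
    diff = statistics.fmean(treatment) - statistics.fmean(control)
    # Standard error of the difference, without assuming equal variances.
    se = math.sqrt(
        statistics.variance(treatment) / len(treatment)
        + statistics.variance(control) / len(control)
    )
    # Two-sided critical value, e.g. about 1.96 for a 95% interval.
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff - z * se, diff + z * se


# Hypothetical tiredness ratings (simulated, not real data).
rng = random.Random(1)
treatment = [rng.gauss(10.0, 4.0) for _ in range(50)]
control = [rng.gauss(12.3, 4.0) for _ in range(50)]

diff, lower, upper = mean_difference_ci(treatment, control)
print(f"mean difference = {diff:.1f}, 95% CI [{lower:.1f}, {upper:.1f}]")
```

The output is reported in the raw units of the tiredness scale, which matches the style of the estimation sentence above.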

hypothesis testing

- hypothesis testing: does effect exist?

- estimation: how big is effect?

- demonstrate existence first (Wagenmakers, Marsman, et al. 2018)

- tooth fairy science

- example: ESP (Wagenmakers et al. 2011)

- advice:

- Bayesian analyses can be very time consuming

- If effect probably exists, go with estimation!

frequentist hypothesis testing

- NHST (null hypothesis significance testing)

- H0: no effect (nil effect)

- H1: there is an effect (it is not nil)

- If we assume that there is no effect, how likely are the data (or more extreme data)?

- Data are very unlikely (p < .05)

- We reject the idea that there is no effect (reject H0)

- We conclude that there is an effect (accept H1)
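The NHST logic above can be made concrete with a permutation test, which asks directly: if group labels did not matter (H0), how often would a difference at least as large as the observed one occur? This is a sketch under assumptions: the ratings are invented, the function name `permutation_p_value` is hypothetical, and a permutation test is only one of several ways to obtain a p value.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided p value for a difference in means under H0 (no effect)."""
    rng = random.Random(seed)
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Under H0 the labels are arbitrary, so reshuffle them.
        rng.shuffle(pooled)
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add one to numerator and denominator so p is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical tiredness ratings (invented for illustration).
treatment = [8, 9, 7, 10, 6, 9, 8, 7]
control = [11, 12, 10, 13, 12, 11, 14, 10]

p = permutation_p_value(treatment, control)
print(f"p = {p:.4f}; reject H0 at .05: {p < 0.05}")
```

Note that the calculation only ever uses H0. Nothing in it says how well H1 would have predicted the same data.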

Sally Clark

- Her two children died shortly after birth

- defense: SIDS

- prosecution: murder

- “1 in 73 million” that 2 children would die of SIDS

- NHST interpretation

- H0 = SIDS

- data are too unlikely: p < 0.05

- reject H0 (no SIDS)

- accept H1 (conclude murder)

Royal Statistical Society

“The jury needs to weigh up two competing explanations. Two deaths by murder may well be even more unlikely” (Green, 2002)

- own calculation for Sweden (some years old)

- SIDS: 1 in 6000

- child murder: 0.036 in 6000

- Bayesian: compare explanations; which is better?

- Frequentist: looks only at H0
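The comparison of explanations can be written out with the two rates given above. This is only an arithmetic sketch: the variable names are hypothetical, and squaring the ratio for two deaths assumes that deaths within one family are independent, which is a strong and doubtful simplification used here for illustration only.

```python
# Rates from the calculation for Sweden quoted above.
sids_rate = 1 / 6000
murder_rate = 0.036 / 6000

# For a single death: how many times more common is SIDS than child murder?
ratio_single = sids_rate / murder_rate

# ASSUMPTION (illustration only): two deaths in one family are independent,
# so both rates are squared and the ratio is squared as well.
ratio_double = ratio_single ** 2

print(f"one death:  SIDS is about {ratio_single:.0f} times more common than murder")
print(f"two deaths: about {ratio_double:.0f} times, if deaths were independent")
```

The point is the comparison itself: a rare explanation can still be the better one if the alternative is rarer.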

NHST = hypothesis myopia

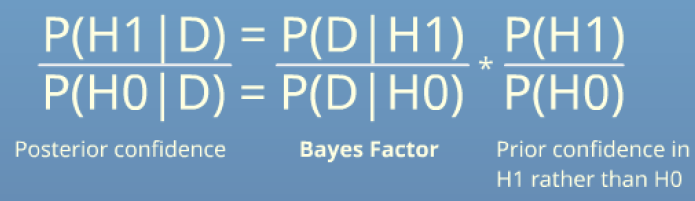

Bayesian hypothesis testing

compares explanations (models)

example: H0 versus H1

Bayesian whodunnit

Whodunnit example

Bayes Factor

- BF10: How much better an explanation is H1 than H0?

- BF01: How much better an explanation is H0 than H1?

- BF10 = 1: H0 and H1 explain equally well

- BF10 < 1: H0 explains better

- BF10 > 1: H1 explains better

- BF is continuous measure of evidence
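A small worked example may help to read these values. The sketch below computes BF10 for a success rate, comparing H0 (rate is exactly 0.5) with H1 (every rate between 0 and 1 is equally plausible beforehand). The function name `bf10_binomial`, the uniform prior under H1, and the data are assumptions chosen for illustration; they are not taken from the talk.

```python
from math import comb


def bf10_binomial(k, n, theta0=0.5):
    """BF10 for k successes in n trials.

    H0: theta = theta0 (point hypothesis).
    H1: theta ~ Uniform(0, 1)  (ASSUMPTION: chosen for simplicity).
    """
    # Probability of the data under H0.
    likelihood_h0 = comb(n, k) * theta0**k * (1 - theta0) ** (n - k)
    # Probability of the data under H1, averaged over the uniform prior.
    # For a uniform prior this integral equals 1 / (n + 1) for every k.
    marginal_h1 = 1 / (n + 1)
    return marginal_h1 / likelihood_h0


for k in (5, 9):
    bf10 = bf10_binomial(k, n=10)
    print(f"{k}/10 successes: BF10 = {bf10:.2f}, BF01 = {1 / bf10:.2f}")
```

With 5 of 10 successes, BF10 is below 1 (H0 explains the data better); with 9 of 10, BF10 is above 3 (H1 explains the data better). BF01 is simply 1 / BF10.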

model comparison

Results depend on the question

“It is sometimes considered a paradox that the answer depends not only on the observations but also on the question; it should be a platitude.” (Jeffreys, 1939)

model vagueness

Bayesian analyses punish vagueness (Wagenmakers 2020)
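Why vagueness is punished can be shown with two models that predict a success rate. The sketch assumes a vague model (any rate equally plausible, Beta(1, 1)) and a specific model (rate close to 0.7, Beta(14, 6)); both priors, the data, and the function names are hypothetical. Each model is scored by how much probability it assigned to the observed data before seeing them.

```python
from math import comb, exp, lgamma


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def marginal_likelihood(k, n, a, b):
    """P(k successes in n trials) when theta ~ Beta(a, b) (beta-binomial)."""
    return comb(n, k) * exp(log_beta(k + a, n - k + b) - log_beta(a, b))


n = 10
vague = (1, 1)      # hypothetical vague model: any rate is equally plausible
specific = (14, 6)  # hypothetical specific model: rate is close to 0.7

for k in (7, 2):
    m_vague = marginal_likelihood(k, n, *vague)
    m_specific = marginal_likelihood(k, n, *specific)
    print(f"{k}/{n} successes: BF(specific vs. vague) = {m_specific / m_vague:.2f}")
```

The vague model spreads its predictions evenly over all outcomes, so it earns little credit even when the data are compatible with it. The specific model takes a risk: it is rewarded when the data match its prediction (7 of 10) and penalized when they do not (2 of 10).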

standardization of effect sizes

“Being so disinterested in our variables that we do not care about their units can hardly be desirable.” (Tukey, 1969)

- raw effect sizes

- Zoltan Dienes

- Wiens (2017)

- standardized effect sizes

- JASP works with standardized effect sizes

- JASP example: Adam Sandler (Wagenmakers, Morey, and Lee 2016)
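The difference between raw and standardized effect sizes can be seen in a short sketch. The data and the function name `raw_and_standardized` are hypothetical; the standardized effect is computed as Cohen's d with a pooled standard deviation, which is one common choice among several.

```python
import math
import statistics


def raw_and_standardized(treatment, control):
    """Return (raw mean difference, Cohen's d with pooled SD)."""
    raw = statistics.fmean(treatment) - statistics.fmean(control)
    n_t, n_c = len(treatment), len(control)
    pooled_var = (
        (n_t - 1) * statistics.variance(treatment)
        + (n_c - 1) * statistics.variance(control)
    ) / (n_t + n_c - 2)
    return raw, raw / math.sqrt(pooled_var)


# Two hypothetical studies with the same raw difference (2 scale points)
# but different measurement noise.
precise = raw_and_standardized([8, 9, 10], [10, 11, 12])
noisy = raw_and_standardized([5, 9, 13], [7, 11, 15])

print(f"precise measure: raw = {precise[0]:.1f}, d = {precise[1]:.2f}")
print(f"noisy measure:   raw = {noisy[0]:.1f}, d = {noisy[1]:.2f}")
```

Both studies show the same difference in scale units, yet the standardized effect is four times smaller in the noisy study. The raw effect keeps the units of the variable; the standardized effect mixes the size of the effect with the noise of the measurement.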

next stop Bayes

- open science (Spellman, Gilbert, and Corker 2018)

- literate programming (Knuth 1984)

- easily done in R with Quarto

- or Python

- many packages in R

- LMM (linear mixed models) easier to fit with Bayesian approach

- but time consuming!

discussion

thank you for your time!

references

Cohen, Jacob. 1994. “The Earth Is Round (p < .05).” American Psychologist 49 (12): 997–1003. https://doi.org/10.1037/0003-066X.49.12.997.

Dienes, Zoltan. 2008. Understanding Psychology as a Science: An Introduction to Scientific and Statistical Inference. Basingstoke: Palgrave Macmillan.

———. 2016. “How Bayes Factors Change Scientific Practice.” Journal of Mathematical Psychology, Bayes factors for testing hypotheses in psychological research: Practical relevance and new developments, 72 (June): 78–89. https://doi.org/10.1016/j.jmp.2015.10.003.

Dienes, Zoltan, and Neil McLatchie. 2018. “Four Reasons to Prefer Bayesian Analyses over Significance Testing.” Psychonomic Bulletin & Review 25 (1): 207–18. https://doi.org/10.3758/s13423-017-1266-z.

Gigerenzer, Gerd. 2004. “Mindless Statistics.” The Journal of Socio-Economics 33 (5): 587–606. https://doi.org/10.1016/j.socec.2004.09.033.

Hoekstra, Rink, Richard D. Morey, Jeffrey N. Rouder, and Eric-Jan Wagenmakers. 2014. “Robust Misinterpretation of Confidence Intervals.” Psychonomic Bulletin & Review 21 (5): 1157–64. https://doi.org/10.3758/s13423-013-0572-3.

Knuth, D. E. 1984. “Literate Programming.” The Computer Journal 27 (2): 97–111. https://doi.org/10.1093/comjnl/27.2.97.

Morey, Richard D., Rink Hoekstra, Jeffrey N. Rouder, Michael D. Lee, and Eric-Jan Wagenmakers. 2016. “The Fallacy of Placing Confidence in Confidence Intervals.” Psychonomic Bulletin & Review 23 (1): 103–23. https://doi.org/10.3758/s13423-015-0947-8.

Sharpe, Donald. 2013. “Why the Resistance to Statistical Innovations? Bridging the Communication Gap.” Psychological Methods 18 (4): 572–82. https://doi.org/10.1037/a0034177.

Spellman, Barbara A., Elizabeth A. Gilbert, and Katherine S. Corker. 2018. “Open Science.” In Stevens’ Handbook of Experimental Psychology and Cognitive Neuroscience, edited by John T. Wixted, 1–47. Hoboken, NJ, USA: John Wiley & Sons, Inc. https://doi.org/10.1002/9781119170174.epcn519.

Wagenmakers, Eric-Jan. 2020. “Bayesian Thinking for Toddlers.” https://doi.org/10.31234/osf.io/w5vbp.

Wagenmakers, Eric-Jan, Jonathon Love, Maarten Marsman, Tahira Jamil, Alexander Ly, Josine Verhagen, Ravi Selker, et al. 2018. “Bayesian Inference for Psychology. Part II: Example Applications with JASP.” Psychonomic Bulletin & Review 25 (1): 58–76. https://doi.org/10.3758/s13423-017-1323-7.

Wagenmakers, Eric-Jan, Maarten Marsman, Tahira Jamil, Alexander Ly, Josine Verhagen, Jonathon Love, Ravi Selker, et al. 2018. “Bayesian Inference for Psychology. Part I: Theoretical Advantages and Practical Ramifications.” Psychonomic Bulletin & Review 25 (1): 35–57. https://doi.org/10.3758/s13423-017-1343-3.

Wagenmakers, Eric-Jan, Richard D. Morey, and Michael D. Lee. 2016. “Bayesian Benefits for the Pragmatic Researcher.” Current Directions in Psychological Science 25 (3): 169–76. https://doi.org/10.1177/0963721416643289.

Wagenmakers, Eric-Jan, Ruud Wetzels, Denny Borsboom, and Han L. J. van der Maas. 2011. “Why Psychologists Must Change the Way They Analyze Their Data: The Case of Psi: Comment on Bem (2011).” Journal of Personality and Social Psychology 100 (3): 426–32. https://doi.org/10.1037/a0022790.

Wiens, Stefan. 2017. “Aladins Bayes Factor in R.” https://doi.org/10.17045/sthlmuni.4981154.v3.

Wiens, Stefan, and Mats E. Nilsson. 2017. “Performing Contrast Analysis in Factorial Designs: From NHST to Confidence Intervals and Beyond.” Educational and Psychological Measurement 77 (4): 690–715. https://doi.org/10.1177/0013164416668950.